
Human Pose Estimation for Care Robots Using Deep Learning

An efficient method for generating big data for pose estimation

By Jun Miura

A research group led by Professor Jun Miura of the Department of Computer Science and Engineering at Toyohashi University of Technology has developed a method that uses deep learning to estimate a wide variety of human poses from depth data alone. Deep learning requires a large volume of training data, so the group has also developed a technique that generates this data efficiently using computer graphics and motion capture. The generated data is publicly available, and the group hopes it will contribute to progress across a wide range of related fields.

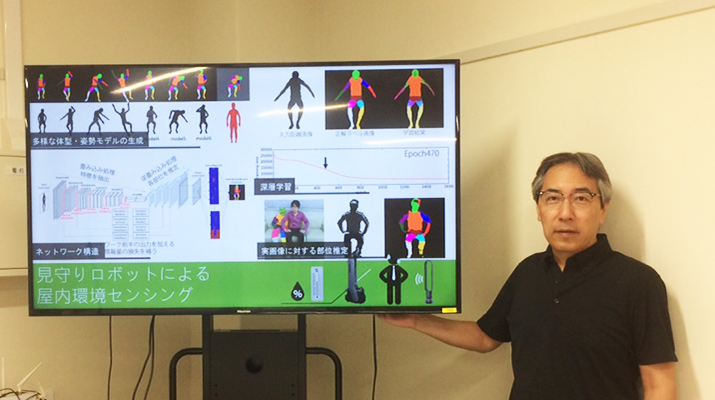

Against a backdrop of declining birthrates, an aging population, and a shortage of nursing and care staff, expectations are growing for robots that can monitor people. In nursing homes and similar facilities, for example, robots are expected to patrol the premises and check on the condition of residents. To assess a person's condition, estimating their pose (standing, sitting, fallen, etc.) is a useful first step, but most methods to date have relied on camera images. Image-based methods raise privacy concerns and are difficult to apply in dark spaces. The research group (Kaichiro Nishi, who completed the master's program in 2016, and Professor Miura) has therefore developed a method that recognizes human pose from depth data alone (Fig. 1).

Left: Experiment scene (this image is not used for estimation). Center: Depth data corresponding to the extracted person region. Right: Estimation result (the colors correspond to individual body parts).

For poses in which body parts are relatively easy to distinguish, such as standing and sitting, there are already methods and devices that estimate pose with high accuracy. Monitoring, however, requires recognizing a much wider variety of poses, including lying down (the recumbent position) and crouching, and this has so far been a difficult problem. With recent progress in deep learning (techniques based on multilayer neural networks), methods that estimate complex poses from images are being developed. Deep learning requires a large amount of training data. For images, a person can identify each body part by eye relatively easily, and several such datasets are publicly available. In depth data, however, the boundaries between body parts are hard to see, which makes generating training data difficult.

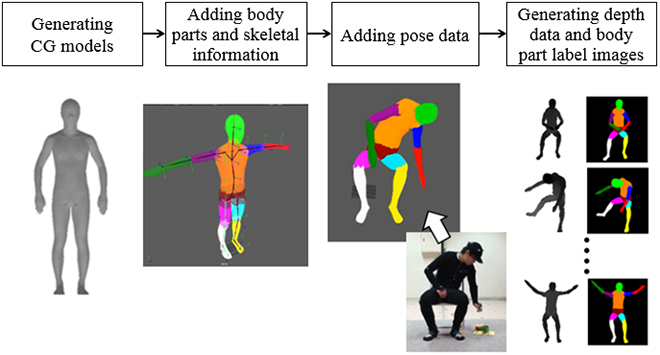

To address this, the group established a method that generates large amounts of training data by combining computer graphics (CG) and motion capture technologies (Fig. 2). The method first creates CG models of various body shapes. It then attaches to each model body-part information (11 parts, including the head, the torso, and the right upper arm) and skeleton information, including the position of each joint. As a result, a CG model can be made to take any pose simply by supplying joint angles, which are obtained with a motion capture system. Fig. 3 shows an example of data generated for various sitting poses.
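
The principle of posing a labeled skeleton by supplying joint angles can be illustrated with planar forward kinematics. The following is a minimal sketch, not the authors' implementation: it assumes a hypothetical two-segment 2D arm whose segment lengths stand in for a body shape, while the joint angles stand in for values a motion capture system would provide. The names `Segment` and `forward_kinematics` and all numeric values are invented for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    """A rigid body part in a hypothetical planar kinematic chain."""
    label: str     # body-part label, e.g. "right_upper_arm"
    length: float  # segment length in metres; varies with body shape


def forward_kinematics(segments, joint_angles, origin=(0.0, 0.0)):
    """Return the 2D position of every joint in the chain.

    joint_angles[i] is the rotation (radians) of segment i relative to the
    previous segment; the first angle is measured from the x-axis.
    """
    if len(segments) != len(joint_angles):
        raise ValueError("need exactly one joint angle per segment")
    x, y = origin
    heading = 0.0
    joints = [(x, y)]
    for seg, angle in zip(segments, joint_angles):
        heading += angle  # relative rotations accumulate along the chain
        x += seg.length * math.cos(heading)
        y += seg.length * math.sin(heading)
        joints.append((x, y))
    return joints


# The same joint angles (one "pose") applied to two hypothetical body shapes.
arm_small = [Segment("right_upper_arm", 0.28), Segment("right_forearm", 0.24)]
arm_large = [Segment("right_upper_arm", 0.34), Segment("right_forearm", 0.29)]
pose = [math.radians(-90), math.radians(90)]  # upper arm down, forearm forward

for arm in (arm_small, arm_large):
    joints = forward_kinematics(arm, pose)
    print([(round(px, 2), round(py, 2)) for px, py in joints])
```

Because the part labels are attached to the skeleton rather than to pixels, they remain correct for any pose the joint angles produce. A 3D version would follow the same pattern with a full 3D rotation at each joint.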

With this method, training data can be generated for any combination of body shape and pose. So far, we have created and released about 100,000 samples in total, covering sitting poses (with and without occlusion) and several recumbent (lying) poses. The data is freely available for research purposes (http://www.aisl.cs.tut.ac.jp/database_HDIBPL.html). In the future, we will also release the human models and detailed procedures for building them, so that anyone can easily generate data. We hope this will contribute to the progress of related fields.
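
Generating data for every combination of body shape and pose amounts to a Cartesian product, where each pair yields a depth image plus a matching per-pixel body-part label image. The sketch below is an illustrative assumption rather than the published pipeline, which renders full 3D CG models: it approximates each body with a few hypothetical labeled points, treats body shape as a uniform scale factor, and uses orthographic projection with a z-buffer. The names `render_depth_and_labels`, `generate_dataset`, `POSES`, and `BODY_SHAPES` are invented for this example.

```python
import itertools
import math


def render_depth_and_labels(points, width, height):
    """Project labeled 3D points into a paired depth image and label image.

    points: iterable of (x, y, z, label), where x and y are already in pixel
    units (orthographic projection) and z is the distance from the sensor.
    A z-buffer keeps only the nearest point per pixel, so each pixel's depth
    and label describe the same visible surface; occluded parts drop out.
    """
    depth = [[math.inf] * width for _ in range(height)]
    labels = [[None] * width for _ in range(height)]
    for x, y, z, label in points:
        u, v = math.floor(x), math.floor(y)  # pixel containing the point
        if 0 <= u < width and 0 <= v < height and z < depth[v][u]:
            depth[v][u] = z
            labels[v][u] = label
    return depth, labels


# Hypothetical toy data: each pose is a list of labeled points (x, y, z, label)
# for a canonical body; each body shape is a uniform scale factor in x and y.
POSES = {
    "upright": [(2, 1, 3.0, "head"), (2, 2, 3.0, "torso"), (2, 3, 3.0, "torso")],
    "lying": [(1, 3, 3.0, "head"), (2, 3, 3.1, "torso"), (3, 3, 3.1, "torso")],
}
BODY_SHAPES = {"slim": 1.0, "broad": 1.25}


def generate_dataset(poses, shapes, width=6, height=6):
    """Render one labeled depth sample per (pose, body shape) combination."""
    samples = []
    for (pose_name, pts), (shape_name, s) in itertools.product(
        poses.items(), shapes.items()
    ):
        scaled = [(x * s, y * s, z, label) for x, y, z, label in pts]
        depth, labels = render_depth_and_labels(scaled, width, height)
        samples.append(
            {"pose": pose_name, "shape": shape_name, "depth": depth, "labels": labels}
        )
    return samples


dataset = generate_dataset(POSES, BODY_SHAPES)
print(len(dataset))  # 2 poses x 2 body shapes = 4 samples
```

The z-buffer step keeps the labels consistent under occlusion: a part hidden behind another is absent from both the depth image and the label image. This is also why, in a synthetic pipeline, labels come from the rendering process itself rather than from inspecting the depth image.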

The result of this research was published in Pattern Recognition on June 3, 2017.

This research was partially supported by JSPS Kakenhi (Grants-in-Aid for Scientific Research) No. 25280093.

Reference

Kaichiro Nishi and Jun Miura (2017). Generation of human depth images with body part labels for complex human pose recognition. Pattern Recognition. http://dx.doi.org/10.1016/j.patcog.2017.06.006

Researcher Profile

| Name | Jun Miura |
|---|---|
| Affiliation | Department of Computer Science and Engineering |
| Title | Professor |
| Fields of Research | Intelligent Robotics / Robot Vision / Artificial Intelligence |
