
Mastering Data Science - semantic understanding of multifarious big data and its social implementation

Masaki Aono

Nowadays it is common for large amounts of data to be stored and used on the internet via SNS and other online data repositories. Data science technology, including information retrieval and data mining (which lets you search for and extract latent but potentially important pieces of information from the data), is vital in an era flooded by so-called "big data". Professor Masaki Aono has been a leading figure in knowledge data engineering research since the 1980s, and his goal is to keep conducting challenging research in the area of data science. Professor Aono started with 3D shape retrieval and has gradually incorporated various state-of-the-art technologies, ranging from multivariate data analysis and traditional machine learning to deep learning.

Interview and report by Madoka Tainaka

World Champions in a Plant Identifying Contest

In 2016, a research team led by Professor Masaki Aono won first place for identification accuracy in an international contest called “PlantCLEF2016”. PlantCLEF2016 is a competition in which participants use image processing technology to automatically identify plants from photographic images covering 1,000 species.

CREDIT: Copyright © PlantCLEF 2016

Professor Aono tells us, "Actually, our research team has been participating in the international image annotation competition ImageCLEF since 2012. The focus of this year’s PlantCLEF contest was on plants. You had to guess each plant’s name based on crowdsourced photos, which is quite a difficult task. For example, some photos include a human hand or a tripod, meaning that the data given to participants naturally includes ‘noise’ completely unrelated to plants. Furthermore, the photos could be close-ups, very small, or even out of focus. I guess the reason we could come home with the first prize is that we came up with a way of extracting ‘features’ from metadata, which helped us get more correct answers in spite of the formidable task."

As the amount of data used in the contests keeps increasing, the research lab has been adding more of its own hand-built GPU (Graphics Processing Unit) machines every year, and this time the team incorporated deep learning to exploit the full capacity of those machines. Deep learning is a technology that has been gaining attention in the field of AI.

However, the victory by Professor Aono’s team did not come down to deep learning alone.

"In 2014, we also won the first prize at the ImageCLEF I mentioned earlier, but that time we used ontology and traditional machine learning rather than deep learning. An ontology is a hierarchical system that explicitly expresses concepts and systematically describes the relationships between them. In other words, it is a vocabulary set that the computer uses to process text and images effectively. By taking advantage of ontology, we managed to annotate images with a high level of accuracy, while other teams using deep learning could not," the professor explains.

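As a toy illustration of how a concept hierarchy can support image annotation, the sketch below expands a few specific labels into all of the broader concepts they imply. The concept names and the `PARENT` table are hypothetical, and this is not the team's actual ontology or method, only a minimal picture of the "hierarchical system" idea.

```python
# Hypothetical toy ontology: each concept maps to its broader parent concept.
PARENT = {
    "oak": "tree",
    "pine": "tree",
    "tree": "plant",
    "rose": "flower",
    "flower": "plant",
    "plant": None,
}


def ancestors(concept):
    """Return the broader concepts above `concept`, nearest first."""
    chain = []
    parent = PARENT.get(concept)
    while parent is not None:
        chain.append(parent)
        parent = PARENT.get(parent)
    return chain


def expand_annotations(labels):
    """Add every broader concept implied by the given labels."""
    expanded = set(labels)
    for label in labels:
        expanded.update(ancestors(label))
    return sorted(expanded)


print(expand_annotations(["oak", "rose"]))
# ['flower', 'oak', 'plant', 'rose', 'tree']
```

With such a hierarchy, an image recognized as an oak can also be annotated as a tree and a plant without a separate classifier for each broader term.
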
The Key is How to Combine Features

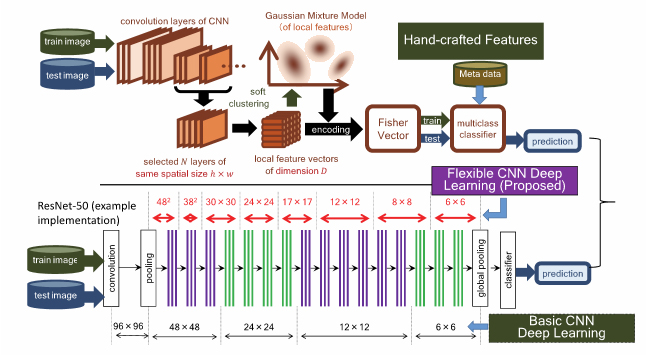

Professor Aono says that their team’s strength lies in their ‘feature combination technology’. A feature is a numerical expression of the characteristics or attributes observed in the target data. Even with ontology, it is crucial to design the features correctly. Until now, features were extracted manually (so-called “hand-crafted features”), but as the amount of data increased, the team began to adopt deep learning to complement them.

"We came up with a new method for deep learning as well. Among the roughly 100,000 photos in the training data, we included various types of images (out of focus, zoomed in on different parts) so that the model would be able to deal with any kind of image. We also incorporated the date and time stamps attached to the data, which we were allowed to use as metadata. From these we could infer that images taken at almost the same time were most likely of the same plant. In the 2016 contest, somehow no other team tried to use this metadata. Ironically for the other teams, it was the use of this metadata that improved our performance and made it possible for us to become world champions."

"For convolution, an underlying technique that helps extract features in deep learning, we also came up with new ideas that change its internal structure, which gave us another advantage over the other teams. Much of the credit goes to a talented exchange student from Malaysia, then a second-year master’s student. The results were published in Springer’s international journal ‘Multimedia Tools and Applications’."

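The timestamp idea in the quote above can be sketched as follows: photos taken within a short interval of each other are grouped, and the classifier scores within each group are pooled before picking a species. This is a minimal sketch under stated assumptions, not the team's implementation; the 60-second threshold, the field names (`taken_at`, `scores`) and the example scores are all hypothetical.

```python
from datetime import datetime, timedelta


def group_by_time(photos, max_gap=timedelta(seconds=60)):
    """Group photos so that consecutive shots in a group are at most max_gap apart."""
    ordered = sorted(photos, key=lambda p: p["taken_at"])
    groups = []
    for photo in ordered:
        if groups and photo["taken_at"] - groups[-1][-1]["taken_at"] <= max_gap:
            groups[-1].append(photo)
        else:
            groups.append([photo])
    return groups


def pooled_prediction(group):
    """Sum per-class scores over a group (same argmax as averaging) and pick the best class."""
    totals = {}
    for photo in group:
        for species, score in photo["scores"].items():
            totals[species] = totals.get(species, 0.0) + score
    return max(totals, key=totals.get)


# Hypothetical classifier outputs: photo "b" is blurry and misclassified on its own.
photos = [
    {"id": "a", "taken_at": datetime(2016, 5, 1, 10, 0, 0), "scores": {"rose": 0.9, "tulip": 0.1}},
    {"id": "b", "taken_at": datetime(2016, 5, 1, 10, 0, 20), "scores": {"rose": 0.4, "tulip": 0.6}},
    {"id": "c", "taken_at": datetime(2016, 5, 1, 11, 0, 0), "scores": {"rose": 0.2, "tulip": 0.8}},
]

for group in group_by_time(photos):
    print([p["id"] for p in group], pooled_prediction(group))
```

Here photos "a" and "b" fall into one group, so the confident prediction for "a" outweighs the weak, wrong prediction for "b", while "c", taken an hour later, is judged on its own.
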
Setting yourself apart is an important task in the competitive world of data processing research. In particular, you cannot hope to compete head-on with Google, Facebook and other big IT companies that have access to enormous amounts of data and resources for their research and development. This is why Professor Aono first chose to work on 3D shape retrieval, a less popular research domain that big companies have not focused on substantially, yet one that is manageable in terms of difficulty.

"Three-dimensional CAD (Computer-Aided Design) is used in a variety of situations, from the design of machine parts to architectural design and CG creation, but creating the underlying geometric model currently takes both time and skill. We developed technology that searches an existing database of 3D shapes with high precision for models similar to a query, which can be a sketch, a 2D image, or a 3D shape acquired with a commercially available motion sensor such as Kinect. If you can search for similar models, there is no need to build the model from scratch, which improves efficiency and means that even novices can design complicated parts using CAD."

This system’s retrieval accuracy is currently the highest in the world (among methods that search for similar 3D shapes with no prior supervised learning). Professor Aono tells us he is in the middle of applying for several new patents, and part of the technology is being transferred to companies that collaborated in the research.
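
Similarity retrieval of this kind is commonly framed as comparing feature vectors. The sketch below assumes each 3D model has already been reduced to a small numeric feature vector and ranks database entries by cosine similarity to the query. The model names and vectors are hypothetical, and cosine similarity is a stand-in for illustration, not the similarity measure used by Professor Aono's system.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical database: model name -> precomputed shape feature vector.
DATABASE = {
    "gear": [0.9, 0.1, 0.0],
    "bracket": [0.1, 0.8, 0.3],
    "pipe": [0.0, 0.2, 0.9],
}


def retrieve(query, database, top_k=2):
    """Return the names of the top_k models most similar to the query vector."""
    ranked = sorted(
        database,
        key=lambda name: cosine_similarity(query, database[name]),
        reverse=True,
    )
    return ranked[:top_k]


print(retrieve([0.8, 0.2, 0.1], DATABASE))
# ['gear', 'bracket']
```

A designer could then start from the retrieved model instead of building the geometry from scratch.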

I want to put my research results to good use in the real world, such as self-driving cars and agriculture.

In addition, Professor Aono is working on research that gathers time-series data from different types of sensors and, by learning from it in advance, predicts what may happen.

"For example, I am conducting research into controlling the environment of a greenhouse to maximize the amount of vegetables and fruits that can be harvested, by equipping the greenhouse with sensors that measure humidity, moisture, sunlight, CO2 etc., then wirelessly collecting and analyzing the data. In the past, I ran demonstration experiments on greenhouse tomato cultivation, aiming to suggest how best to control the greenhouse environment to maximize the tomato yield."

With regard to the processing of time-series data, the professor is also pursuing research into BCI (Brain Computer Interface), using data measured from the human brain to predict a person’s psychological state or to allow communication with a computer simply by thinking. He is also beginning research that uses LSTM (Long Short-Term Memory), a type of deep learning that can handle both long-term and short-term time-series data. This technique can be used, for example, to guess the author of a book from a piece of writing, or to automatically create a piece of writing in the style of a specific author.

On the other hand, his research on scene graphs for automatically adding notes to an image is also interesting. A scene graph is a method of displaying the relationship either between two objects or between an object and the attribute, with a so-called 'directed graph structure' (a graph composed of nodes and directed edges).
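
The directed-graph structure described above can be sketched as a small data structure in which nodes are objects or attributes and each directed edge carries a relation label. The class, the relation labels and the example scene are hypothetical and only illustrate the idea; they are not taken from Professor Aono's system.

```python
class SceneGraph:
    """Directed graph: nodes are objects or attributes, edges are labeled relations."""

    def __init__(self):
        self.edges = {}  # source node -> list of (relation, target node)

    def add(self, source, relation, target):
        """Add a directed edge source --relation--> target."""
        self.edges.setdefault(source, []).append((relation, target))

    def targets(self, source, relation):
        """Return every node reached from `source` by an edge labeled `relation`."""
        return [t for r, t in self.edges.get(source, []) if r == relation]


# Hypothetical annotations for one street scene.
graph = SceneGraph()
graph.add("tree", "is a", "pine")           # object -> attribute
graph.add("sky", "looks", "sunny")          # object -> attribute
graph.add("car", "parked beside", "tree")   # object -> object
graph.add("building", "made of", "brick")   # object -> attribute

print(graph.targets("sky", "looks"))         # ['sunny']
print(graph.targets("building", "made of"))  # ['brick']
```

Once relations are stored this way, a question about the image becomes a lookup along the edges of the graph.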

"For example, if we can automatically extract objects in an image such as roads, trees, cars, the sky etc. and add notes about the relationship, it would become possible for the computer to answer questions such as what kind of trees there are, whether it’s sunny, if there are any obstructions in front of us, etc.

If we continue to progress with this research, we could use it in car navigation systems to give commands such as ‘turn right at the brick building in front of you’. If we could add notes to the constantly changing scenery, I think it may help develop self-driving cars in the future," says Professor Aono.

"I’m troubled by the amount of things I want to do," adds the professor. His research covers a number of different topics, all of which are building blocks critical to the development of the increasingly influential field of AI. No doubt the professor will continue to contribute actively to this effort in the future.

Reporter's Note

Professor Aono joined IBM after graduating from the University of Tokyo and, after working on CG and CAD research, turned to information retrieval in the late 1990s. He became a professor at Toyohashi University of Technology in 2003 and accelerated his research. “I want to construct a system that can correctly identify things even better than a human specialist.” That is the motivation behind his research.

The professor feels that he gets his love of nature, including an obsession with classification, from his father, a former director of the Kurashiki Museum of Natural History in Okayama Prefecture. On his days off he enjoys taking photos of flowers and birds. Recently he succeeded in photographing a red-flanked bluetail, a bird he had been chasing for a long time. "One day I would like to try using data processing to identify birds and insects." The professor has an endless curiosity. Professor Aono’s ‘search for a bluebird’ continues inside his research.

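Mastering the processing of diverse data: 3D, images, video, text, and more

In recent years, large volumes of highly diverse digital data have been accumulated and used on the Web, in SNS and online data repositories. Making use of that data depends on processing technologies such as information retrieval and data mining, which extracts useful information from data. Professor Masaki Aono is a leading figure who has worked in this field of intelligent data processing since the 1980s. Starting with 3D shape similarity retrieval and extending to images, video, and text, he aims to improve the accuracy of data processing across many kinds of data while adopting advanced technologies such as deep learning.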

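World No. 1 in a plant identification contest

In 2016, a research team led by Professor Masaki Aono achieved the world's top identification accuracy in an international contest called "PlantCLEF2016". In PlantCLEF2016, participants use image processing and related technologies to automatically identify the species shown in photographic images covering 1,000 kinds of plants.

"In fact, our team has been taking part in ImageCLEF, an international image retrieval contest, since 2012, and this PlantCLEF is the part of it that specializes in plants. You have to name the plant from crowdsourced photos, and it is quite a difficult task. For example, the set includes photos in which a human hand or leg appears alongside the plant, close-ups of bark, and shots taken from far away. I think we came out on top because we put a lot of thought into how we extract 'features', which let us raise the rate of correct answers even on such a harsh task," Professor Aono explains.

Because the amount of data handled in these contests grows every year, the lab has been adding hand-built GPU (Graphics Processing Unit) machines year after year, and this time it also adopted deep learning, which has been attracting attention in the field of AI in recent years. However, the victory of Professor Aono's team was not due to deep learning alone.

"In 2014 we also took first place in the world at the ImageCLEF I mentioned, but that time we used conventional machine learning with an ontology rather than deep learning. An ontology is a hierarchical system of concepts that expresses concepts explicitly and describes the relationships among them systematically. In other words, it is a set of vocabulary that lets a computer process text and images well. By using it skillfully, we achieved higher accuracy than other teams that used deep learning," says Professor Aono.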

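The key is how to combine features

Professor Aono says his team's strength lies in "the art of combining features". A feature is a numerical representation of the characteristics found in the training data. Even when an ontology is used, it is essential to design the features derived from it well. Traditionally, features were extracted by hand, but as data volumes grew, the team recently began adopting deep learning to extract features automatically.

"We put a lot of thought into the deep learning as well. We included all sorts of images, such as out-of-focus photos and close-ups of parts, among the roughly 100,000 training images so that the model could cope with any kind of image. We also took in the date and time attached to the data, which we were permitted to use as metadata. The idea is to infer that images taken at almost the same time probably show the same plant. In the 2016 contest, the other teams did not try to use this metadata. Ironically for them, it seems that using this metadata pushed our performance up considerably and made our team number one in the world (laughs).

"Furthermore, for convolution, a technique that helps extract features in deep learning, we came up with a new idea that changes its internal structure, to differentiate ourselves from the other teams. A talented international student from Malaysia, then in the second year of the master's program, played a major role in this idea. The result was also accepted by Springer's international journal 'Multimedia Tools and Applications'."

Differentiation is a key issue in the fiercely competitive field of data processing research. In particular, one cannot win by taking on IT companies such as Google and Facebook head-on, since they pursue research and development backed by enormous data and assets. For that reason, the first topic Professor Aono took up was 3D shape similarity retrieval, which had not been studied much precisely because it is difficult.

"Today, 3D CAD (Computer-Aided Design) is used in a wide range of settings, from the design of machine parts to architectural design and CG production, but creating such geometric models from scratch takes time and a practiced hand. So we developed technology that searches a database of existing 3D shape models with high precision for models similar to a 3D image or shape model captured with a commercially available motion sensor device such as Kinect, or to a sketch. If similar shape models can be retrieved, there is no need to design from scratch, which improves efficiency and also lets newcomers design complex parts in CAD."

The retrieval accuracy of this system is currently the best in the world (among 3D shape similarity retrieval methods that require no prior training). Several new patent applications are pending, and part of the technology is being transferred to companies that are research partners.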

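Putting research results to use in the real world, in areas such as self-driving cars and agriculture

Professor Aono is also devoting effort to research that collects time-series data from various sensors and, by learning from it in advance, predicts events that may occur. "For example, we are also doing research in which sensors that measure temperature, moisture, solar radiation, CO2, and so on are installed in a greenhouse, the data is collected wirelessly and analyzed, and the greenhouse environment is controlled so as to maximize the harvest of vegetables and fruit. We have run demonstration experiments with greenhouse-grown tomatoes."

As for processing time-series data, he also works on predicting a person's psychological state from measured brain data, and on BCI (Brain Computer Interface), which allows communication with a computer just by thinking. In addition, he has begun research that uses LSTM (Long Short-Term Memory), a form of deep learning, to learn from long time-series data, for example to infer the author of a text or to automatically generate text in the style of a particular author.

His research on scene graphs, which automatically annotate a given image, is also interesting. A scene graph is a method of showing the relationships between objects with a graph (a figure made up of points and edges) referred to as a tree structure. "For example, if we can automatically extract objects in an image such as roads, trees, cars, and the sky and annotate their relationships, it becomes possible to answer questions such as what kind of tree this is, whether it is sunny today, and whether there is an obstacle ahead.

"If we can take this research further, it could also be used in car navigation, with instructions such as 'Turn right at the T-junction with the brick building straight ahead.' If annotations can be attached in step with scenery that changes from moment to moment, I think it could eventually help with self-driving as well," says Professor Aono.

"There are so many things I want to do that it's a problem," Professor Aono says, and his research is indeed wide-ranging, but all of it is foundational technology indispensable to the progress of AI, which is now drawing attention for its potential to change society profoundly. We look forward to further results.

(Interview and text: Madoka Tainaka)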

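Reporter's Note

After graduating from university, Professor Aono joined IBM, and after research in CG and CAD he has been working on information retrieval since the late 1990s. He became a professor at Toyohashi University of Technology in 2003 and has accelerated his research since then. His motivation is the wish to "build a system that can correctly identify things that even experts find hard to tell apart".

In fact, his father is a former director of the natural history museum in Kurashiki, Okayama Prefecture, and the professor says his insistence on accurate identification and his attachment to nature come from his father. On days off he enjoys photographing flowers and birds. The other day he succeeded in photographing a red-flanked bluetail, a blue bird he had pursued for many years. "Someday I would like to try identifying birds and insects through data processing," he says, his curiosity showing no end. Professor Aono's search for the "blue bird" in his research still goes on.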

Researcher Profile

Dr. Masaki Aono

Dr. Masaki Aono received the BS and MS degrees from the Department of Information Science at the University of Tokyo, and the PhD degree from the Department of Computer Science at Rensselaer Polytechnic Institute, New York. He was with the IBM Tokyo Research Laboratory from 1984 to 2003. In 2002 and 2003, he was a visiting teacher at Waseda University. He is currently a professor at the Graduate School of Computer Science and Engineering Department, Toyohashi University of Technology.

Reporter Profile

Madoka Tainaka is a freelance editor, writer and interpreter. She graduated in Law from Chuo University, Japan. She has served as chief editor of “Nature Interface” magazine and as a committee member for the promotion of Information and Science Technology at MEXT (Ministry of Education, Culture, Sports, Science and Technology).
